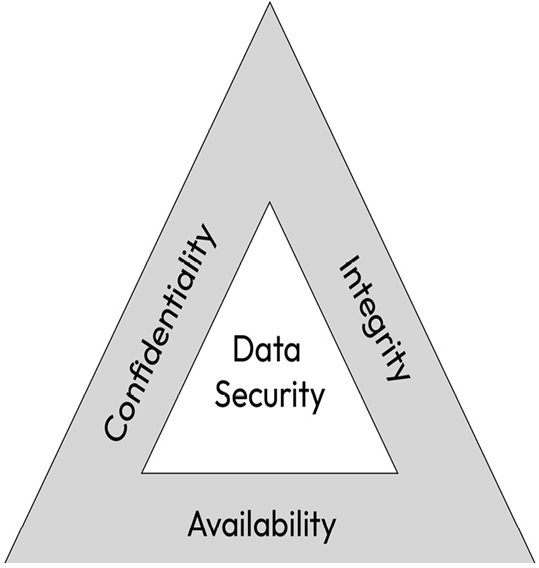

Not to be confused with the Central Intelligence Agency, which shares the acronym, CIA stands for confidentiality, integrity, and availability. It is a widely used information security model that helps an organization protect its sensitive, critical information and assets from unauthorized access:

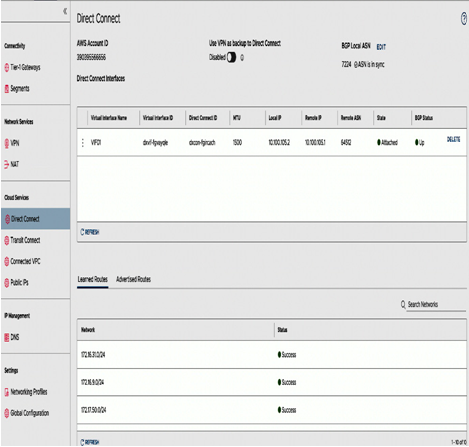

Figure 1.6 – The CIA triad (https://devopedia.org/images/article/178/8179.1558871715.png)

The preceding diagram depicts the CIA triad. Let’s understand its attributes in detail.

Confidentiality

Confidentiality ensures that sensitive information is kept private and accessible only to authorized individuals or entities. It aims to prevent unauthorized disclosure, so that information cannot be accessed or viewed by unauthorized users. Let’s understand this by looking at the example of an organization’s payroll system. Here, confidentiality means that employee salary information, tax details, and other sensitive financial data are kept private and accessible only to authorized personnel. Unauthorized access to such information can lead to privacy breaches, identity theft, or financial fraud.
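As a minimal sketch of the payroll example, confidentiality can be enforced with an authorization check before any salary data is returned. The role names, the `User` class, and the `read_salary` function are hypothetical and chosen only for illustration; a real system would rely on its own identity and access management layer.

```python
from dataclasses import dataclass
from typing import Mapping

# Hypothetical roles allowed to read payroll data (an assumption for this sketch).
AUTHORIZED_ROLES = {"payroll_admin", "hr_manager"}


@dataclass(frozen=True)
class User:
    name: str
    role: str


def read_salary(user: User, records: Mapping[str, int], employee: str) -> int:
    """Return an employee's salary only if the requester holds an authorized role."""
    if user.role not in AUTHORIZED_ROLES:
        # Deny by default: anyone without an authorized role is rejected.
        raise PermissionError(f"{user.name} may not view payroll data")
    return records[employee]
```

The check denies by default, so any role that is not explicitly listed cannot read the data.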

Integrity

Integrity maintains the accuracy and trustworthiness of data by preventing unauthorized modifications. It safeguards against unauthorized modification, deletion, or tampering, so that information remains accurate and trustworthy throughout its life cycle. Let’s understand integrity using the same payroll system example. Here, integrity means that payroll data stays accurate and changes only through authorized updates. Any unauthorized modification to payroll data could lead to incorrect salary payments, tax discrepancies, or compliance issues.
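One common way to detect tampering is to store a keyed hash (HMAC) alongside each record and recompute it before the record is used. The following is a minimal sketch using Python's standard `hmac` and `hashlib` modules; the key, the record fields, and the function names are assumptions made for illustration, and a real deployment would load the key from a secrets manager rather than hard-coding it.

```python
import hashlib
import hmac
import json

# Hypothetical demo key; never hard-code a real key like this.
SECRET_KEY = b"demo-key-not-for-production"


def sign_record(record: dict) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def is_unmodified(record: dict, tag: str) -> bool:
    """Return True only if the record still matches the tag computed earlier."""
    # compare_digest performs a constant-time comparison of the two tags.
    return hmac.compare_digest(sign_record(record), tag)
```

If a stored payroll record is later changed without access to the key, `is_unmodified` returns `False` for the original tag, which flags the record for investigation.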

Availability

Availability ensures that information and services are accessible and operational when needed. It focuses on preventing disruptions or denial of service, so that authorized users can access the information and services they require without interruption. Let’s understand availability using the same payroll system example. Here, availability means that the system is accessible and functional when needed. Payroll processing is critical for employee satisfaction and business operations, and any disruption to the system could result in delayed payments or other financial issues.
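Redundancy is one of several techniques that support availability. The sketch below tries a list of backends in order and returns the first successful response, so a single failed server does not interrupt payroll access. The `primary` and `replica` functions are hypothetical stand-ins for real network calls.

```python
from typing import Callable, Optional, Sequence


def fetch_with_failover(backends: Sequence[Callable[[], str]]) -> str:
    """Try each backend in order and return the first successful response."""
    last_error: Optional[Exception] = None
    for backend in backends:
        try:
            return backend()
        except ConnectionError as exc:
            # Remember the failure and fall through to the next backend.
            last_error = exc
    raise ConnectionError("all payroll backends failed") from last_error


def primary() -> str:
    # Hypothetical primary server that is currently unreachable.
    raise ConnectionError("primary payroll server is down")


def replica() -> str:
    # Hypothetical replica that is still serving requests.
    return "payroll data from replica"
```

Calling `fetch_with_failover([primary, replica])` falls back to the replica when the primary raises `ConnectionError`, and raises only when every backend fails.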

Overall, the CIA triad provides a framework for organizations to develop effective cybersecurity strategies. By focusing on confidentiality, integrity, and availability, organizations can better protect their systems and data from a wide range of threats, including cyberattacks, data breaches, and other security incidents.