Before you deploy your first SDDC, collect the configuration data in advance. Ideally, these settings are captured at the design stage, as discussed in the previous chapter.

The following table lists the configuration items you need to provide to successfully deploy your first SDDC:

| Configuration section | Configuration item | Description |

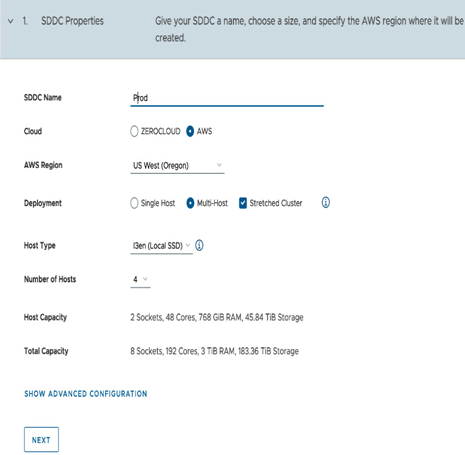

| SDDC (see Figure 12.3 for details) | Name | Free text field. You can change the name after the deployment as well. It is recommended to use the company naming convention. |

| AWS Region | AWS Region where your SDDC resides. The Region should fit your subscription, AWS VPC configuration, and AWS DX configuration (if in use). | |

| Deployment | Single host – for POC only, for 60 days only. Multi-host – production deployment. Stretched cluster – a deployment across two AWS AZs. | |

| Host type | Select one of the available host types. The host type should fit into your subscription, design, and workload requirements. You have a choice between: i3.metali3en.metalI4i.metal See Figure 12.4 for the deployment wizard where the host type is specified. VMware constantly adds new instances. Check the VMware documentation for the available instances. | |

| Number of hosts | Count of ESXi hosts in your first cluster. If your design requires a multi-cluster setup, you will add additional clusters after the SDDC is provisioned with the first cluster. | |

| AWS Connection (see Figure 12.2 for details) | AWS account | This is an AWS account you own. Choose the account according to the design and security requirements. |

| Choose a VPC | Select an AWS VPC (the VPC should be precreated) in your AWS account. This VPC will become a connected VPC after the deployment. | |

| Choose subnet(s) | Select a subnet in your VPC (the subnet must be precreated). The subnet must have enough free IPs for the SDDC deployment (to accommodate ESXi hosts’ ENI interfaces). The subnet also defines the destination AZ. You cannot change the subnet after the deployment. If you deploy a stretched cluster SDDC, you must select two subnets in two different AZs. | |

| SDDC networking | Provide the management subnet CIDR | You should provide a private network subnet with enough IP addresses for the SDDC management (vCenter, ESXi hosts, vSAN network, etc.). It is recommended to use a /23 subnet if you plan to deploy more than 10 hosts. You cannot change the subnet after the deployment. Make sure the subnet does not overlap with the on-premises or other connected networks (including AWS). |

Table 12.1 – SDDC Configuration Details
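Because several of these settings cannot be changed after deployment, it can help to record them in a structured form and run a few sanity checks before opening the wizard. The following is a minimal sketch, not a VMware tool: the `SddcPlan` class, its field names, the deployment labels, and the `validate` function are all hypothetical. It assumes IPv4 CIDRs and interprets the /23 recommendation as "a /23 or larger network when planning more than 10 hosts".

```python
from dataclasses import dataclass, field
from ipaddress import ip_network
from typing import List


@dataclass
class SddcPlan:
    """Hypothetical container for the items collected in the table above."""
    name: str
    region: str
    deployment: str            # assumed labels: "single-host", "multi-host", "stretched"
    host_type: str
    num_hosts: int
    subnet_azs: List[str]      # AZ of each selected subnet in the connected VPC
    management_cidr: str
    other_cidrs: List[str] = field(default_factory=list)  # on-premises, AWS VPCs, etc.


def validate(plan: SddcPlan) -> List[str]:
    """Return human-readable problems; an empty list means none were found."""
    problems = []

    if plan.deployment not in ("single-host", "multi-host", "stretched"):
        problems.append(f"unknown deployment type: {plan.deployment}")
    if plan.deployment == "single-host" and plan.num_hosts != 1:
        problems.append("single-host deployment must have exactly 1 host")

    # A stretched cluster spans two AZs; other deployments use one subnet.
    if plan.deployment == "stretched":
        if len(plan.subnet_azs) != 2 or len(set(plan.subnet_azs)) != 2:
            problems.append("stretched cluster needs two subnets in two different AZs")
    elif len(plan.subnet_azs) != 1:
        problems.append("non-stretched deployment needs exactly one subnet")

    mgmt = ip_network(plan.management_cidr)
    if not mgmt.is_private:
        problems.append("management CIDR should be a private range")
    if plan.num_hosts > 10 and mgmt.prefixlen > 23:
        problems.append("use a /23 or larger management CIDR for more than 10 hosts")
    for cidr in plan.other_cidrs:
        if mgmt.overlaps(ip_network(cidr)):
            problems.append(f"management CIDR overlaps {cidr}")

    return problems


# Example values are made up for illustration.
plan = SddcPlan(
    name="sddc-prod-01",
    region="eu-west-1",
    deployment="multi-host",
    host_type="i3.metal",
    num_hosts=12,
    subnet_azs=["eu-west-1a"],
    management_cidr="10.2.0.0/24",
    other_cidrs=["10.2.0.0/16"],
)
for problem in validate(plan):
    print(problem)
```

In this example the plan is flagged twice: the /24 management network is smaller than recommended for 12 hosts, and it overlaps the 10.2.0.0/16 network listed under `other_cidrs`.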

You can review the deployment wizard in Figure 12.3:

Figure 12.3 – SDDC deployment wizard: SDDC Properties

You can review the VPC and subnet details of the SDDC wizard in Figure 12.4:

Figure 12.4 – SDDC deployment wizard: AWS VPC and subnet

After you have provisioned the SDDC, you must configure access to the vSphere Web Client so that you can manage your SDDC through VMware vCenter Server. Access to vCenter is not allowed by default. To enable it, use the NSX Manager UI to create a Management Gateway firewall rule that specifies an IP address or a subnet and grants it access to vCenter. An “allow all” rule is not possible.
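The constraint on the rule source can be illustrated with a short sketch. The function name `vcenter_access_rule` and the dictionary keys below are hypothetical and do not reflect the NSX API schema; the sketch only shows the idea that the source must be a specific IP address or subnet, never "any". It assumes vCenter is reached over HTTPS on TCP port 443.

```python
from ipaddress import ip_network


def vcenter_access_rule(name: str, source: str) -> dict:
    """Describe a Management Gateway rule; the keys are illustrative only."""
    # Accepts a single address ("203.0.113.10") or a subnet ("203.0.113.0/24").
    net = ip_network(source, strict=False)
    if net.prefixlen == 0:
        # A /0 network matches every address, which amounts to "allow all".
        raise ValueError("an 'allow all' source is not permitted for vCenter access")
    return {
        "name": name,
        "source": str(net),
        "destination": "vCenter",
        "service": "HTTPS (TCP 443)",
        "action": "ALLOW",
    }


# Example address taken from a documentation-only range.
rule = vcenter_access_rule("admin-workstation-to-vcenter", "203.0.113.10")
print(rule["source"])
```

A single address is normalized to a /32 network, so the same function covers both the "IP" and the "subnet" case mentioned above.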