The primary goal of a landing zone is to ensure consistent deployment and governance across various environments, such as production (Prod), quality assurance (QA), user acceptance testing (UAT), and development (Dev). Let us understand the core concepts associated with landing zones:

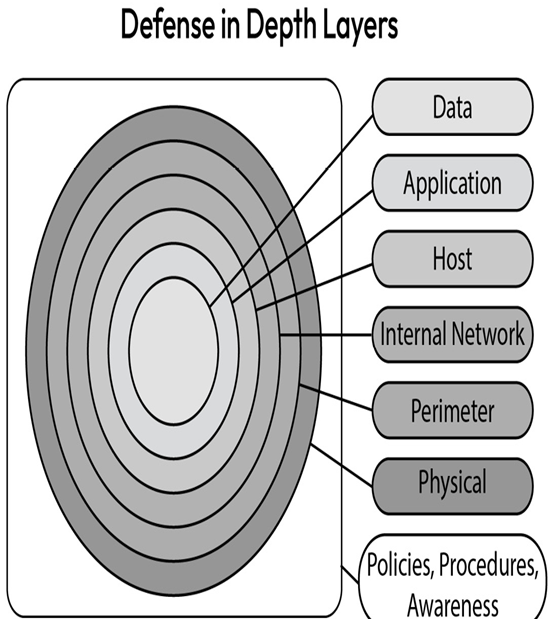

- Network segmentation: A critical aspect of landing zone architecture, network segmentation divides the cloud environment into distinct network segments to ensure isolation and security between environments and workloads. Each environment (Prod, QA, UAT, and Dev) has a dedicated network segment, and these segments are logically separated to prevent unauthorized access between environments. This ensures that activities in one environment do not impact others and that sensitive data is adequately protected.

- Isolation of environments: The network segments for each environment are isolated from each other to minimize the risk of data breaches or unauthorized access. This can be achieved through various means, such as Virtual Private Clouds (VPCs) in AWS, Virtual Networks (VNets) in Azure, or VPCs in GCP.

- Connectivity between environments: While isolation is crucial, there are specific scenarios where controlled connectivity is required between environments, such as data migration or application integration. This connectivity should be strictly controlled and monitored to avoid security risks.
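The segmentation and controlled-connectivity ideas above can be sketched in a few lines of Python. This is only an illustrative model, not a provider API: the CIDR ranges, the `SEGMENTS` address plan, the `ALLOWED_LINKS` allow-list, and the function names are all assumptions made up for this example. It checks that no two environment segments overlap and that cross-environment traffic is denied unless a link has been explicitly approved.

```python
import ipaddress
from itertools import combinations

# Hypothetical address plan: one dedicated CIDR block per environment.
SEGMENTS = {
    "prod": ipaddress.ip_network("10.0.0.0/16"),
    "qa": ipaddress.ip_network("10.1.0.0/16"),
    "uat": ipaddress.ip_network("10.2.0.0/16"),
    "dev": ipaddress.ip_network("10.3.0.0/16"),
}

# Hypothetical allow-list of approved one-way links (source, destination),
# for example a data migration from QA into UAT.
ALLOWED_LINKS = {("qa", "uat")}


def overlapping_segments(segments):
    """Return every pair of environment names whose CIDR blocks overlap."""
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(segments.items(), 2)
        if net_a.overlaps(net_b)
    ]


def environment_of(address, segments):
    """Return the environment whose segment contains the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in segments.items():
        if ip in network:
            return name
    return None


def is_traffic_allowed(src, dst, segments=SEGMENTS, allowed=ALLOWED_LINKS):
    """Allow traffic inside one environment or over an approved link only."""
    src_env = environment_of(src, segments)
    dst_env = environment_of(dst, segments)
    if src_env is None or dst_env is None:
        return False  # unknown addresses are denied by default
    return src_env == dst_env or (src_env, dst_env) in allowed
```

In a real landing zone, these rules would be enforced by the provider's own constructs (route tables, peering, firewall rules) rather than by application code; the sketch only makes the default-deny logic explicit.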

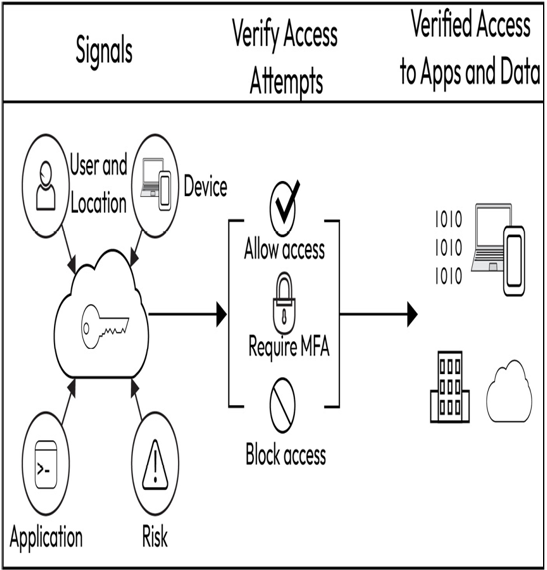

- Identity and access management (IAM): IAM policies and roles are implemented to regulate access to cloud resources within each environment. This ensures that only authorized users have access to specific resources based on their roles and responsibilities.

- Security measures: Each landing zone environment should have its own security measures in place, including firewall rules, security groups, network access control lists (NACLs), and other security-related settings. These help safeguard resources and data from potential threats.

- Centralized governance: A landing zone architecture also implements centralized governance and monitoring to maintain consistency, compliance, and visibility across all environments. This involves using a central management account or a shared services account for common services.

- Resource isolation: Within each environment, further resource isolation can be achieved by using resource groups (Azure), projects (GCP), or accounts grouped into organizational units (AWS) to logically group resources and manage access control more effectively.

- Monitoring and auditing: To maintain the health and security of the landing zone, comprehensive monitoring and auditing practices should be implemented. This includes monitoring for suspicious activities, resource utilization, and compliance adherence.
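To make the IAM and auditing ideas above more concrete, here is a minimal sketch of role-based access checks scoped per environment, with every decision recorded for later review. It is not tied to any cloud provider's IAM service: the `Grant` and `AccessController` classes, the role names, and the action names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Grant:
    """A hypothetical grant: what a role may do in one environment."""
    role: str
    environment: str
    actions: frozenset


# Illustrative grants only; real policies live in the provider's IAM service.
GRANTS = (
    Grant("developer", "dev", frozenset({"read", "write", "deploy"})),
    Grant("developer", "prod", frozenset({"read"})),
    Grant("release-manager", "prod", frozenset({"read", "deploy"})),
)


@dataclass
class AccessController:
    grants: tuple = GRANTS
    audit_log: list = field(default_factory=list)

    def is_authorized(self, user, role, environment, action):
        """Deny by default; allow only if a matching grant exists."""
        allowed = any(
            g.role == role and g.environment == environment and action in g.actions
            for g in self.grants
        )
        # Record every decision so that denied attempts can be reviewed.
        self.audit_log.append(
            {
                "user": user,
                "role": role,
                "environment": environment,
                "action": action,
                "allowed": allowed,
            }
        )
        return allowed

    def denied_events(self):
        """Return the audit entries for requests that were refused."""
        return [event for event in self.audit_log if not event["allowed"]]
```

For example, a user with the `developer` role can deploy to `dev` but not to `prod`, and the refused attempt shows up in `denied_events()`, which is the kind of signal a monitoring process would alert on.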

Overall, a landing zone architecture provides a solid foundation for an organization’s cloud deployment by enforcing security, governance, and network segmentation across different environments. The approach is cloud provider-agnostic and can be adapted to platforms such as Azure, AWS, and GCP while following each provider’s best practices and services. To read more, search for “Cloud Adoption Framework” followed by the cloud provider’s name; you will find plenty of resources.

Summary

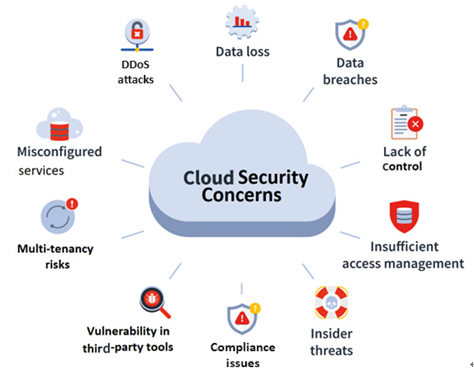

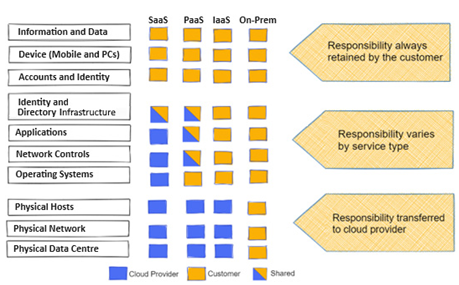

Cloud security is an interesting topic and fun to learn. I hope you enjoyed reading about these fundamental concepts as much as I enjoyed writing about them. In this chapter, we introduced some important security and compliance concepts: shared responsibility in cloud security, encryption and its relevance in a cloud environment, compliance concepts, the Zero Trust model and its foundational pillars, and some of the most important topics related to cryptography. Finally, you were introduced to the Cloud Adoption Framework (CAF) and landing zones. The terms and concepts discussed in this chapter will be referred to throughout this book, so I encourage you to dive as deep into these topics as you can.

In the next chapter, we will learn about cloud security posture management (CSPM) and the important concepts around it. Happy learning!

Further reading

To learn more about the topics that were covered in this chapter, look at the following resources:

- Certificate of Cloud Security Knowledge (CCSK): https://cloudsecurityalliance.org/education/ccsk/

- CIS Benchmarks list: https://www.cisecurity.org/cis-benchmarks

- Shared responsibility model: https://aws.amazon.com/compliance/shared-responsibility-model/